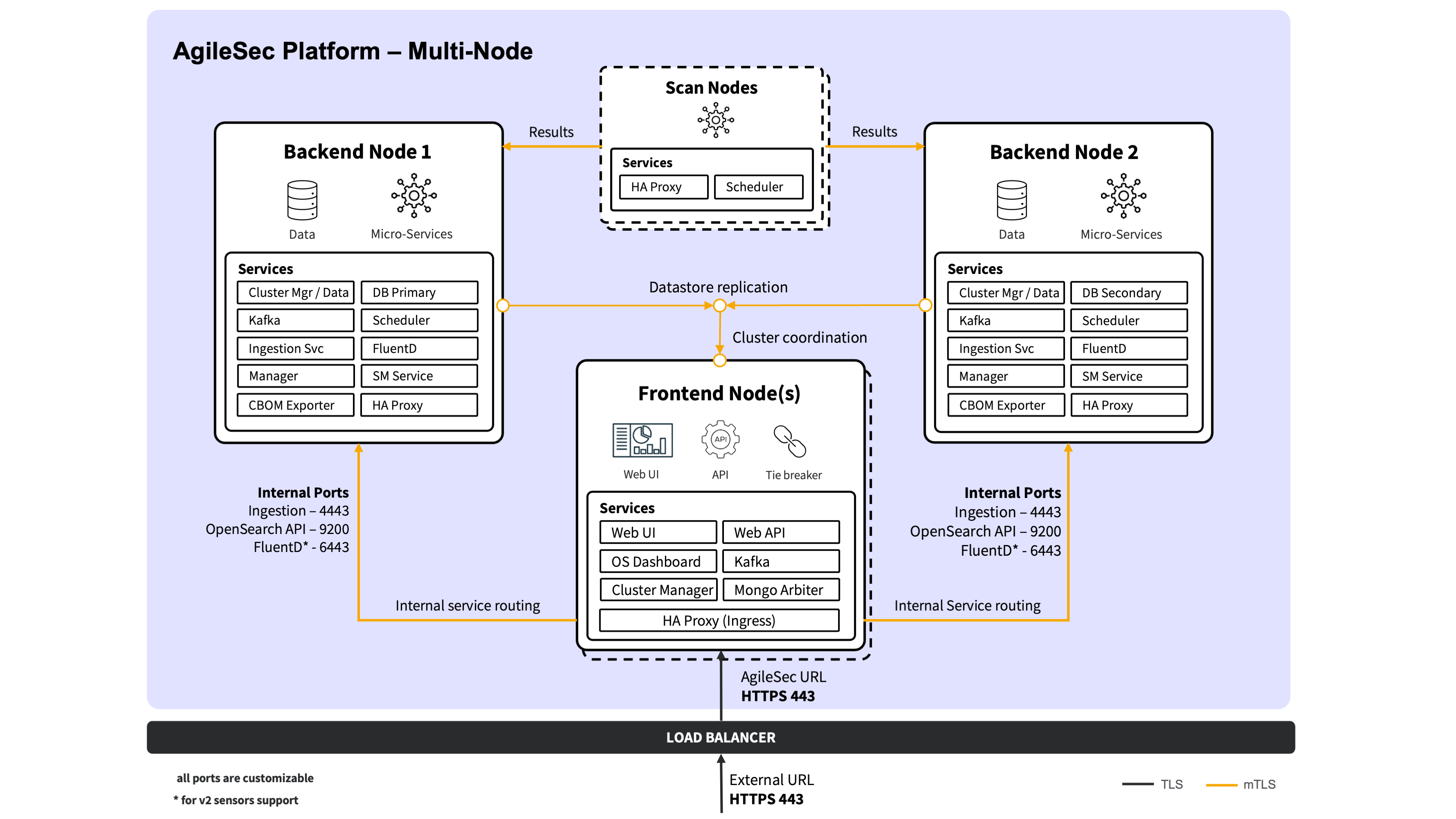

On-Prem Multi-Node Installation Guide

This On-Prem Multi-Node Installation Guide applies to version 3.4.

Overview

The multi-node installation creates a distributed cluster with specialized nodes. It is recommended for:

Production environments

High availability requirements

Large data volumes

Backend Nodes

Backend nodes run the following services:

Services | Description |

|---|---|

OpenSearch Data Node | Indexes and stores findings data received from sensors |

OpenSearch Master Node | Manages OpenSearch cluster state and configuration |

MongoDB Primary or Secondary Node | Stores operational and configuration data for the platform. The primary MongoDB node runs on the first backend node, and secondary MongoDB nodes run on all subsequent backend nodes |

Kafka | Central communication backbone for the platform. It carries job requests, execution events, raw findings, processed findings, control messages, and system events for asynchronous processing across the platform |

Ingestion Service | Always-on ingestion endpoint for receiving data streams from sensors. It publishes data to the Kafka pipeline for further processing |

Manager Service | Always-on service responsible for job lifecycle management and post-scan orchestration |

Scheduler Service | Always-on orchestration service responsible for initiating jobs |

Secrets Management Service | Always-on service responsible for secure key management, token generation and encryption across the platform |

Fluentd | Always-on service that consumes raw findings events from Kafka, applies transformations, and indexes them into the OpenSearch Findings Datastore |

HAProxy | Load balancer and reverse proxy for routing traffic between nodes |

Frontend Nodes

Frontend nodes run the following services:

Services | Description |

|---|---|

Web UI | External web interface for the platform |

Web API | API services for supporting Web UI |

OpenSearch Dashboards | Self-service tool to perform advanced searches, create custom visualizations, and build tailored reports for findings |

OpenSearch Master Node | Manages OpenSearch cluster state and configuration. Does not store data |

MongoDB Arbiter | Votes in primary elections for the MongoDB replica set. Does not store data |

HAProxy | Internal load balancer and reverse proxy for routing traffic for all services |

CBOM Exporter | Handles CBOM export generation |

Scan Nodes

Scan nodes are an optional component for scalability. The following services run on scan nodes:

Services | Description |

|---|---|

HAProxy | Proxy for routing traffic to backend nodes |

Scheduler Service | Always-on orchestration service responsible for initiating jobs |

Prerequisites

Operating System Requirements

The following Linux operating systems are supported:

Officially supported: Red Hat Enterprise Linux (RHEL) 8 and 9+

Compatible (expected to work): CentOS 8 or 9+, Alma Linux 8 or 9+

System requirements:

Requirements | Backend (Minimum) | Backend (Production) | Scan-node | Frontend | Additional-Frontends |

|---|---|---|---|---|---|

CPU cores | 4 | 8 | 2 | 4 | 2 |

Memory | 32GB | 64GB - small scan volume | 8GB | 16GB | 16GB |

Disk space | 50GB | 100GB - small scan volume | 50GB | 50GB | 50GB |
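To check a node against this table before installing, a short pre-flight script can help. The following is a minimal sketch, assuming a Linux host with /proc/meminfo and GNU coreutils; the helper names are hypothetical and not part of the installer, and the thresholds are passed as arguments so the same functions work for every node type.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight helpers (not shipped with the installer).
# Compare this node's CPU cores, memory and free disk space with the
# minimums from the system requirements table.

# Succeed if the host has at least $1 CPU cores available.
check_min_cpus() {
  local want="$1" have
  have=$(nproc)
  [ "$have" -ge "$want" ]
}

# Succeed if total memory is at least $1 GiB (MemTotal is reported in KiB).
check_min_mem_gb() {
  local want_gb="$1" have_kb
  have_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  [ "$have_kb" -ge $(( want_gb * 1024 * 1024 )) ]
}

# Succeed if the filesystem holding path $1 has at least $2 GiB available.
check_min_disk_gb() {
  local path="$1" want_gb="$2" avail_kb
  # -P prints one line per filesystem; column 4 is available 1K-blocks.
  avail_kb=$(df -Pk "$path" | awk 'NR == 2 {print $4}')
  [ "$avail_kb" -ge $(( want_gb * 1024 * 1024 )) ]
}
```

For example, on a production backend node: check_min_cpus 8 && check_min_mem_gb 62 && check_min_disk_gb / 100. MemTotal reports usable memory, which is usually slightly below the installed amount, so this example allows a small margin (62 rather than 64). If the installation directory is on a separate filesystem, pass that path instead of /.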

Ports:

The following default ports are used by various internal components. All ports are configurable.

Service | Default Port | Ingress | Node Type |

|---|---|---|---|

HA Proxy | 8443 | Yes | Frontend |

Web UI | 3000 | No | Frontend |

Web API | 7443 | No | Frontend |

OpenSearch Dashboards | 5443 | No | Frontend |

OpenSearch | 9200/9300 | Yes | Frontend and Backend |

Ingestion Service | 4443 | No | Backend |

FluentD | 6443 | No | Backend |

Analytics Manager Service | 3443 | No | Backend |

Secrets Management Service | 2443 | No | Backend |

Scheduler Service | 1443 | No | Backend and ScanNode |

Kafka | 9092/9093/9094 | Yes | Frontend and Backend |

MongoDB | 27017 | Yes | Frontend and Backend |

SM-router (HA Proxy) | 20443 | Yes | Frontend and Backend |

Analytics-manager-router (HA Proxy) | 30443 | Yes | Frontend and Backend |

Ingestion-router (HA Proxy) | 40443 | Yes | Frontend and Backend |

Opensearch-router (HA Proxy) | 50443 | Yes | Frontend and Backend |

CBOM Exporter | 11443 | No | Frontend |

Ensure network connectivity between cluster nodes, including any firewall rules that allow traffic on the ports listed above.
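If firewalld is running on your nodes (the default on RHEL), the ports marked Yes in the Ingress column need to be opened. The following is a minimal sketch, assuming firewalld and the default port numbers listed above; it prints the firewall-cmd commands for review instead of running them. Port 8443 (HAProxy) only applies to the frontend, so trim the list per node type and adjust it if you changed any defaults.

```bash
#!/usr/bin/env bash
# Sketch: open the default ingress ports from the Ports table with firewalld.
# Assumes firewalld and unchanged default ports; edit INGRESS_PORTS as needed.
INGRESS_PORTS=(8443 9200 9300 9092 9093 9094 27017 20443 30443 40443 50443)

# Print the commands instead of executing them so they can be reviewed first.
print_firewall_cmds() {
  local port
  for port in "${INGRESS_PORTS[@]}"; do
    echo "firewall-cmd --permanent --add-port=${port}/tcp"
  done
  # Permanent rules only take effect after a reload.
  echo "firewall-cmd --reload"
}
```

Review the output, then apply it with print_firewall_cmds | sudo bash. Consider restricting these rules to your cluster subnet (for example, with a dedicated firewalld zone) rather than opening them to all sources.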

Certificate Requirements

The platform uses mutual TLS (mTLS) for secure authenticated communication between all services and server components.

You can generate and self-sign all required certificates, or provide your own. For detailed information and instructions, see the On-Prem Certificates Management Guide.

generate_certs.sh generates the following certificates:

Type | Purpose | Nodes | Files |

|---|---|---|---|

CA Cert | Used to self-sign the generated certificates | | |

External (user-facing) certificate | TLS certificate for the frontend proxy. This certificate is presented to anyone accessing the AgileSec Platform and is used for all ingress TLS traffic to the platform endpoint. Ideally, it should be issued by a publicly trusted Certificate Authority to ensure browser and client trust. | Frontend | |

Client certificates | mTLS client authentication for internal service-to-service communication. Set config setting … | All | |

Internal server certificates | TLS certificates for all internal services. By default, a single wildcard certificate is generated and used by all internal services. | All | |

Admin client certificates | Admin user for OpenSearch and MongoDB post-install setup. Only required during installation or post-install setup. | All | |

SM service keystore | Required by the SM service for storing encryption keys. | All | |

IdP certificate | SAML Identity Provider (IdP) signing certificate. | Frontend | |

Run generate_certs.sh to see the full list of files and their locations. Generate the certificates on the first node (backend-1), then copy them to the other nodes, following the filename conventions above.
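Before copying certificates to other nodes, especially ones issued by your own CA, it can help to confirm that each certificate chains to the CA bundle and is not about to expire. The following is a minimal sketch using the standard openssl CLI; the function names and the 30-day default window are illustrative assumptions, not part of generate_certs.sh.

```bash
#!/usr/bin/env bash
# Sketch: sanity-check a PEM certificate with the openssl CLI.
# Function names and the 30-day default window are illustrative only.

# Print the subject, issuer and expiry date of a certificate.
cert_summary() {
  openssl x509 -in "$1" -noout -subject -issuer -enddate
}

# Usage: cert_ok <ca_bundle> <cert> [days]
# Succeed only if CERT chains to CA_BUNDLE and stays valid for DAYS more days.
cert_ok() {
  local ca_bundle="$1" cert="$2" days="${3:-30}"
  # openssl verify exits non-zero if the chain cannot be built.
  openssl verify -CAfile "$ca_bundle" "$cert" >/dev/null 2>&1 || return 1
  # -checkend N exits non-zero if the certificate expires within N seconds.
  openssl x509 -in "$cert" -noout -checkend $(( days * 86400 )) >/dev/null
}
```

For example: cert_ok <ca_chain.pem> <server_cert.pem> && cert_summary <server_cert.pem>. The file names are placeholders; use the ones from your certificate directory.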

Domain Name Requirements

A single FQDN is required. We recommend the following format:

agilesec.<external domain>

For example: agilesec.dilithiumbank.com

Installation Steps for a 3-node Cluster

This section covers the steps for installing a three-node cluster with the following node types:

Frontend 1

Backend 1

Backend 2

For instructions on installing an optional Scan node, refer to the HA guide.

Step 1: Prepare the Environment

Ensure that you have the installer package and that your nodes meet the minimum CPU and memory requirements.

For easier file transfers between nodes, we also recommend setting up SSH key-based passwordless access from backend-1 to all other nodes, since certificates and other configuration files are generated on backend-1. We also recommend using the same directory names on each node when copying files between machines.
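The recommended passwordless SSH access can be set up with the standard OpenSSH tools. The following is a minimal sketch, assuming ssh-keygen and ssh-copy-id are installed on backend-1 and that password login is still allowed on the target nodes for the one-time key copy; setup_ssh_access and the DRY_RUN switch are hypothetical helpers, not part of the installer.

```bash
#!/usr/bin/env bash
# Hypothetical helper: passwordless SSH from backend-1 to the other nodes.
# Set DRY_RUN=1 to print the commands instead of running them.

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Usage: setup_ssh_access <user> <host>...
setup_ssh_access() {
  local user="$1" key="$HOME/.ssh/id_ed25519" host
  shift
  run mkdir -p -m 700 "$HOME/.ssh"
  # Create a passphrase-less key pair only if one does not already exist.
  if [ ! -f "$key" ]; then
    run ssh-keygen -t ed25519 -N "" -f "$key"
  fi
  for host in "$@"; do
    # Appends the public key to ~/.ssh/authorized_keys on the remote host.
    run ssh-copy-id -i "${key}.pub" "${user}@${host}"
  done
}
```

For example, run setup_ssh_access <user> <backend-2_ip> <frontend-1_ip> on backend-1, then confirm that ssh <user>@<backend-2_ip> no longer prompts for a password.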

Download the installation zip archive from the Keyfactor download portal.

Extract the zip archive to your preferred location. We recommend using agilesec-analytics as the installer directory:

unzip -d <installer_directory> <installer_package>.zip

cd <installer_directory>

Ensure the installation script is executable:

cd <installer_directory>

chmod +x install_analytics.sh

Environment configuration is required before starting the installation. A sample configuration file for a multi-node installation is available at generate_envs/multi_node_config.conf. Edit this file for your installation type. At a minimum, you must provide the private IP addresses of all your nodes (be-1, be-2, fe-1). You can override the following additional settings based on your environment:

Setting | Purpose | Default |

|---|---|---|

| | IP address of frontend 1 | Empty; must be specified |

| | IP address of backend 1 | Empty; must be specified |

| | IP address of backend 2 | Empty; must be specified |

| | Organization name used by the platform | |

| | Primary external-facing hostname (FQDN host portion) for the platform | |

| | Primary external-facing domain for the platform | |

| | External-facing port for accessing the platform | |

| | Base Distinguished Name (DN) used to generate server certificates | |

| | Base DN used to generate internal client certificates | |

| | Enables support for v2 sensors | |
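A typo in a node IP address is easy to miss until the installation fails. The following is a minimal sketch of a pre-check in Bash; is_ipv4 and is_private_ipv4 are hypothetical helpers, and the RFC 1918 check is only a hint, since your network may use other private ranges.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: validate node IP addresses before entering them in
# generate_envs/multi_node_config.conf.

# Succeed if $1 is a dotted-quad IPv4 address with each octet in 0-255.
is_ipv4() {
  local ip="$1" octet
  [[ "$ip" =~ ^([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})\.([0-9]{1,3})$ ]] || return 1
  for octet in "${BASH_REMATCH[@]:1}"; do
    # 10# forces base 10 so values such as 08 are not parsed as octal.
    (( 10#$octet <= 255 )) || return 1
  done
}

# Succeed if $1 is a valid IPv4 address in an RFC 1918 private range.
is_private_ipv4() {
  is_ipv4 "$1" || return 1
  [[ "$1" =~ ^10\. || "$1" =~ ^192\.168\. || "$1" =~ ^172\.(1[6-9]|2[0-9]|3[01])\. ]]
}
```

For example, for ip in <be-1_ip> <be-2_ip> <fe-1_ip>; do is_private_ipv4 "$ip" || echo "check $ip"; done flags any address that is malformed or outside the usual private ranges.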

Generate the configuration files for the multi-node installation:

./generate_envs/generate_envs.sh -t multi-node

The following files will be generated:

- <installer_directory>/generate_envs/generated_envs/env.backend-1
- <installer_directory>/generate_envs/generated_envs/env.backend-2
- <installer_directory>/generate_envs/generated_envs/env.frontend-1

generate_envs.sh copies env.backend-1 to <installer_directory>/.env.
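To confirm this step before generating certificates, you can check that all three env files exist and that .env matches env.backend-1. The following is a minimal sketch; check_generated_envs is a hypothetical helper, and it assumes .env lives in the installer directory, as in Step 2.

```bash
#!/usr/bin/env bash
# Hypothetical check (not part of the installer): confirm that
# generate_envs.sh produced one env file per node and copied
# env.backend-1 to <installer_directory>/.env.

# Usage: check_generated_envs <installer_directory>
check_generated_envs() {
  local dir="$1" gen="$1/generate_envs/generated_envs" f rc=0
  for f in env.backend-1 env.backend-2 env.frontend-1; do
    # -s: the file exists and is not empty.
    if [ ! -s "$gen/$f" ]; then
      echo "missing or empty: $gen/$f" >&2
      rc=1
    fi
  done
  # On backend-1, .env should be an exact copy of env.backend-1.
  if ! cmp -s "$gen/env.backend-1" "$dir/.env"; then
    echo "$dir/.env is missing or differs from env.backend-1" >&2
    rc=1
  fi
  return "$rc"
}
```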

Generate certificates

You can generate and self-sign all required certificates using <installer_directory>/certificates/generate_certs.sh. Alternatively, you can use certificates issued by your own CA. For POCs and first-time installations, we recommend generating all certificates with generate_certs.sh.

Run the following command on backend-1 to generate and self-sign all required certificates. The .env file is required to generate certificates; by default, the script looks for the .env file under <installer_directory>.

The script populates the certificate files under the certificates directory and generates an archive named kf-agilesec.internal-certs.tgz, which you must copy to all other nodes. In addition to the certificates, this archive includes env.backend-1, env.backend-2, and env.frontend-1.

cd certificates/

./generate_certs.sh

[Optional] Using your own certificates

If you are using your own certificates, perform the following steps:

Copy your CA certificate chain to <installer_directory>/certificates/ca

Copy server and client certificates to <installer_directory>/certificates/<analytics_internal_domain>. Certificate filenames must match those listed under Certificate Requirements.

Copy files from backend-1 to the other nodes. For each node, copy kf-agilesec.internal-certs.tgz to <installer_directory>/certificates/:

scp kf-agilesec.internal-certs.tgz <backend-2_ip>:<installer_directory>/certificates/

scp kf-agilesec.internal-certs.tgz <frontend-1_ip>:<installer_directory>/certificates/

The scp command is provided as an example. You may use any file transfer method that suits your environment.
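Before copying, you can confirm that the archive contains the per-node env files and then copy it to every other node in one loop. The following is a minimal sketch, assuming key-based SSH from backend-1, that it runs from <installer_directory>/certificates on backend-1, and that the installer directory has the same path on every node; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: check the certificate archive, then copy it to
# every other node with scp.
ARCHIVE=kf-agilesec.internal-certs.tgz

# Succeed if the archive contains the three per-node env files.
archive_has_envs() {
  local listing f
  listing=$(tar -tzf "$1") || return 1
  for f in env.backend-1 env.backend-2 env.frontend-1; do
    # Match the file name at the end of an entry, whatever its leading path.
    grep -Eq "(^|/)${f}\$" <<<"$listing" || return 1
  done
}

# Usage: distribute_archive <installer_directory> <host>...
distribute_archive() {
  local dir="$1" host
  shift
  archive_has_envs "$ARCHIVE" || { echo "unexpected archive contents" >&2; return 1; }
  for host in "$@"; do
    scp "$ARCHIVE" "${host}:${dir}/certificates/"
  done
}
```

For example: distribute_archive <installer_directory> <backend-2_ip> <frontend-1_ip>.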

Step 2: Install on Backend-1

1. Ensure you have either a DNS entry (recommended) for <analytics_hostname>.<external domain> pointing to the frontend-1 IP address, or an entry in your /etc/hosts for <analytics_hostname>.<external domain> pointing to the frontend-1 IP address.

2. Make sure the settings file <installer_directory>/.env is present.

3. Run sudo ./scripts/tune.sh -u <username> to update the following:

System settings:

Sysctl setting | Recommended value |

|---|---|

vm.max_map_count | 262144 |

fs.file-max | 65536 |

Security settings in /etc/security/limits.conf for file descriptors, number of processes/threads, and locked memory. OpenSearch requires these settings:

Type | Item | Value |

|---|---|---|

soft and hard (-) | nofile | 65536 |

soft and hard (-) | nproc | 65536 |

soft | memlock | unlimited |

hard | memlock | unlimited |

/etc/hosts entries:

<private ip> <frontend node 1 hostname>.<analytics_internal_domain>

<private ip> <backend node 1 hostname>.<analytics_internal_domain>

<private ip> <backend node 2 hostname>.<analytics_internal_domain>

Installs the git binary.

Alternatively, you can perform the above steps manually.
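If you perform these steps manually, the settings above can be written as drop-in files. The following is a minimal sketch, assuming root privileges on a systemd-based RHEL host; the file name 99-agilesec.conf and the helper names are hypothetical, and it uses /etc/security/limits.d instead of editing limits.conf directly, which may differ from what tune.sh does. Setting ROOT to a scratch directory writes the files there for review instead of into /etc.

```bash
#!/usr/bin/env bash
# Sketch: apply the tune.sh settings by hand (run as root).
# ROOT: leave empty to write to the real /etc, or point it at a scratch
# directory to review the files first. File names are assumptions.
ROOT="${ROOT:-}"

write_sysctl() {
  mkdir -p "$ROOT/etc/sysctl.d"
  cat > "$ROOT/etc/sysctl.d/99-agilesec.conf" <<'EOF'
vm.max_map_count = 262144
fs.file-max = 65536
EOF
  # Load the new values immediately only when writing to the real /etc.
  if [ -z "$ROOT" ]; then sysctl --system >/dev/null; fi
}

# Usage: write_limits <username>   (the user that runs the services)
write_limits() {
  local user="$1"
  mkdir -p "$ROOT/etc/security/limits.d"
  cat > "$ROOT/etc/security/limits.d/99-agilesec.conf" <<EOF
$user - nofile 65536
$user - nproc 65536
$user soft memlock unlimited
$user hard memlock unlimited
EOF
}

# Usage: add_host_entry <private_ip> <fqdn>; skips names already present.
add_host_entry() {
  local ip="$1" name="$2" hosts="$ROOT/etc/hosts"
  mkdir -p "$(dirname "$hosts")"
  touch "$hosts"
  # Compare the name against whole fields so partial matches are ignored.
  if ! awk -v n="$name" '{for (i = 2; i <= NF; i++) if ($i == n) found = 1} END {exit !found}' "$hosts"; then
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
  fi
}
```

Call add_host_entry once per node, for example add_host_entry <private ip> <backend node 1 hostname>.<analytics_internal_domain>. New limits apply to new login sessions, so log out and back in as <username> before installing.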

Run the following command to start the installation, then follow the prompts:

cd <installer_directory>

./install_analytics.sh -u <user> -p <installation-dir>

The <installation-dir> is a new, separate directory where the installed files will reside.

To see additional install options, run ./install_analytics.sh -l. If any required parameters are omitted, the script prompts you to enter them interactively.

Step 3: Install on Backend-2

1. On Backend 2, unarchive kf-agilesec.internal-certs.tgz and copy the .env file to <installer_directory>:

cd <installer_directory>/certificates/

tar zxvf kf-agilesec.internal-certs.tgz

cp env.backend-2 ../.env

2. Follow steps 2.1, 2.2 and 2.3 from Step 2.

3. Run the following command to start the installation on backend 2, then follow the prompts:

cd <installer_directory>

./install_analytics.sh -u <user> -p <installation-dir>

Step 4: Install on Frontend-1

1. On Frontend 1, unarchive kf-agilesec.internal-certs.tgz and copy the .env file to <installer_directory>:

cd <installer_directory>/certificates/

tar zxvf kf-agilesec.internal-certs.tgz

cp env.frontend-1 ../.env

2. Follow steps 2.1, 2.2 and 2.3 from Step 2.

3. Run the following command to start the installation on frontend 1, then follow the prompts:

cd <installer_directory>

./install_analytics.sh -u <user> -p <installation-dir>

At the end of the installation, the installer displays the following access details:

- Web UI access information:
  - Login URL
  - Admin username
  - Admin password (the password you provided during installation is not displayed here)
- Ingestion service endpoint for the v3 unified sensor
- Ingestion endpoint for v2 sensors

Post-Installation Verification

Verify Service Health

Run ./scripts/manage.sh status on each node to check the status of all services. If any service shows Not running, try restarting it. See managing services for instructions on starting and restarting services.
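When checking several nodes, it can help to filter the status output instead of reading it line by line. The following is a minimal sketch that assumes the column layout shown in the examples below (service name in the first column, "Running" or "Not running" in the status column); not_running and count_running are hypothetical helper names.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that read "manage.sh status" output on stdin.
# The header row (first field SERVICE), the dashed separator and blank
# lines are skipped.

# Print the name of every service that is not reported as Running.
not_running() {
  awk '$1 == "SERVICE" || $1 ~ /^-/ || NF == 0 {next} !/Running/ {print $1}'
}

# Print how many services are reported as Running.
count_running() {
  awk '$1 == "SERVICE" || $1 ~ /^-/ {next} /Running/ {n++} END {print n + 0}'
}
```

For example, ./scripts/manage.sh status | count_running should print 9 on each node of the 3-node cluster, and ./scripts/manage.sh status | not_running lists the services to restart.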

On Backend 1 you should see 9 services in Running status:

$ ./manage.sh status

SERVICE DESCRIPTION STATUS

------------------------ ---------------------------------------- --------------------

haproxy HAProxy Load Balancer Running (PID: 1660435)

opensearch OpenSearch Search Engine Running (PID: 1660418)

mongodb MongoDB Server Running (PID: 1660778)

kafka Kafka Server Running (PID: 1660784)

scheduler Scheduler Microservice Running (PID: 1660800)

sm Security Manager Microservice Running (PID: 1660895)

ingestion Ingestion Microservice Running (PID: 1660859)

analytics-manager Analytics Manager Microservice Running (PID: 1660891)

td-agent Fluentd Data Collector Running (2 instances)

On Backend 2 you should see 9 services in Running status:

$ ./manage.sh status

SERVICE DESCRIPTION STATUS

------------------------ ---------------------------------------- --------------------

haproxy HAProxy Load Balancer Running (PID: 381335)

opensearch OpenSearch Search Engine Running (PID: 381300)

mongodb MongoDB Server Running (PID: 381660)

kafka Kafka Server Running (PID: 381666)

scheduler Scheduler Microservice Running (PID: 381682)

sm Security Manager Microservice Running (PID: 381683)

ingestion Ingestion Microservice Running (PID: 381747)

analytics-manager Analytics Manager Microservice Running (PID: 381774)

td-agent Fluentd Data Collector Running (2 instances)

On Frontend 1 you should see 9 services in Running status:

$ ./manage.sh status

SERVICE DESCRIPTION STATUS

------------------------ ---------------------------------------- --------------------

haproxy HAProxy Load Balancer Running (PID: 276293)

opensearch OpenSearch Search Engine Running (PID: 276275)

mongodb MongoDB Server Running (PID: 276602)

kafka Kafka Server Running (PID: 276608)

sm Security Manager Microservice Running (PID: 277548)

opensearch-dashboards OpenSearch Dashboards Running (PID: 277400)

webui Web UI Microservice Running (PID: 276653)

api Web API Microservice Running (PID: 277871)

cbom CBOM Exporter Microservice Running (PID: 277880)

Access the Platform UI

To log in to the new Web UI, use the URL displayed at the end of the installation:

Login URL: https://<analytics_hostname>.<analytics_domain>:<analytics_port>

Username: admin@<analytics_domain>

Password: the admin password you provided during installation

For example, using the default settings:

Login URL: https://analytics.kf-agilesec.com:8443

Username: admin@kf-agilesec.com

Password: HelloWorld123456!

You will see a login screen like this:

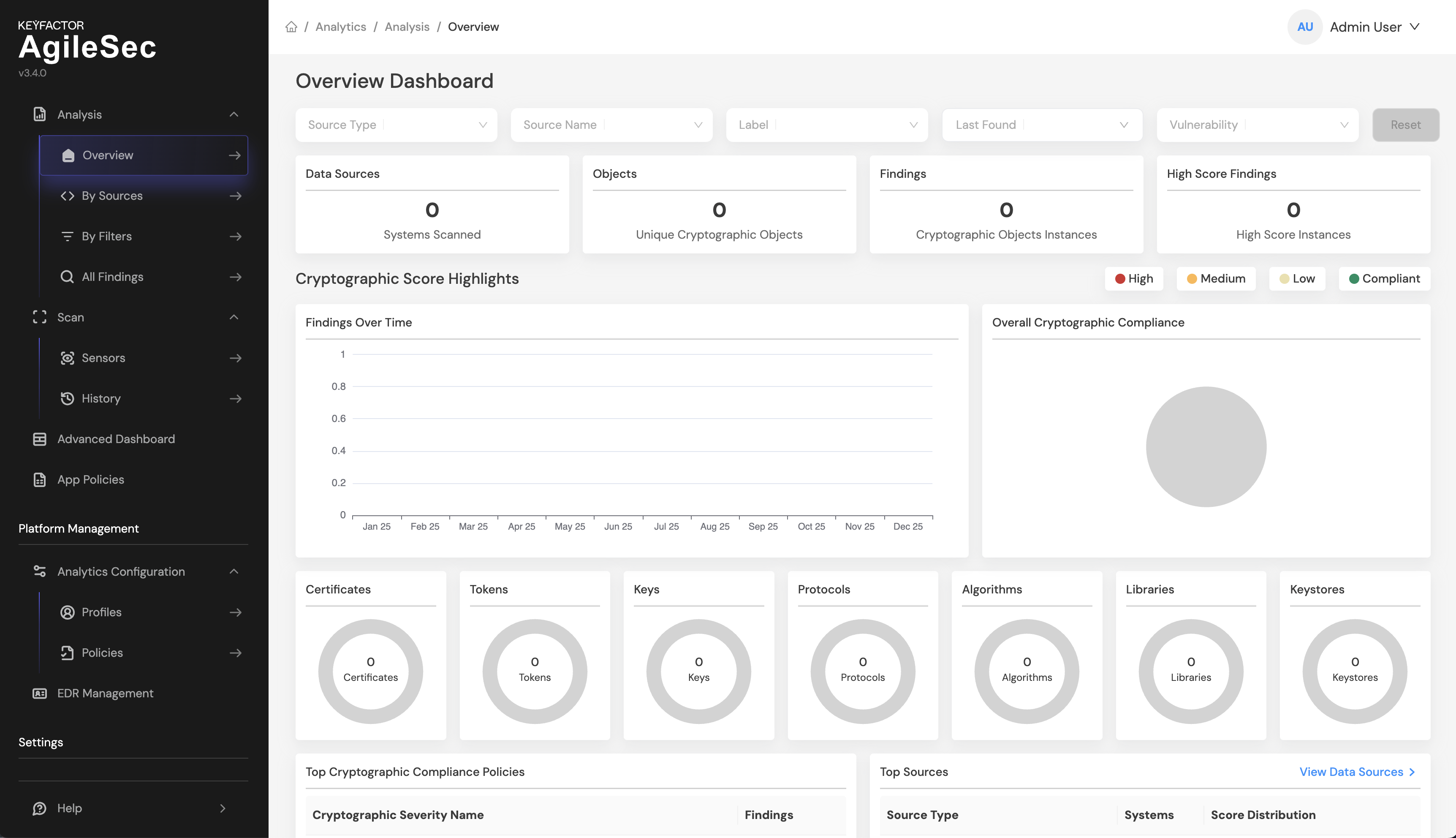

After logging in, the Overview Dashboard should show 0 across all charts, as shown in the screenshot below:

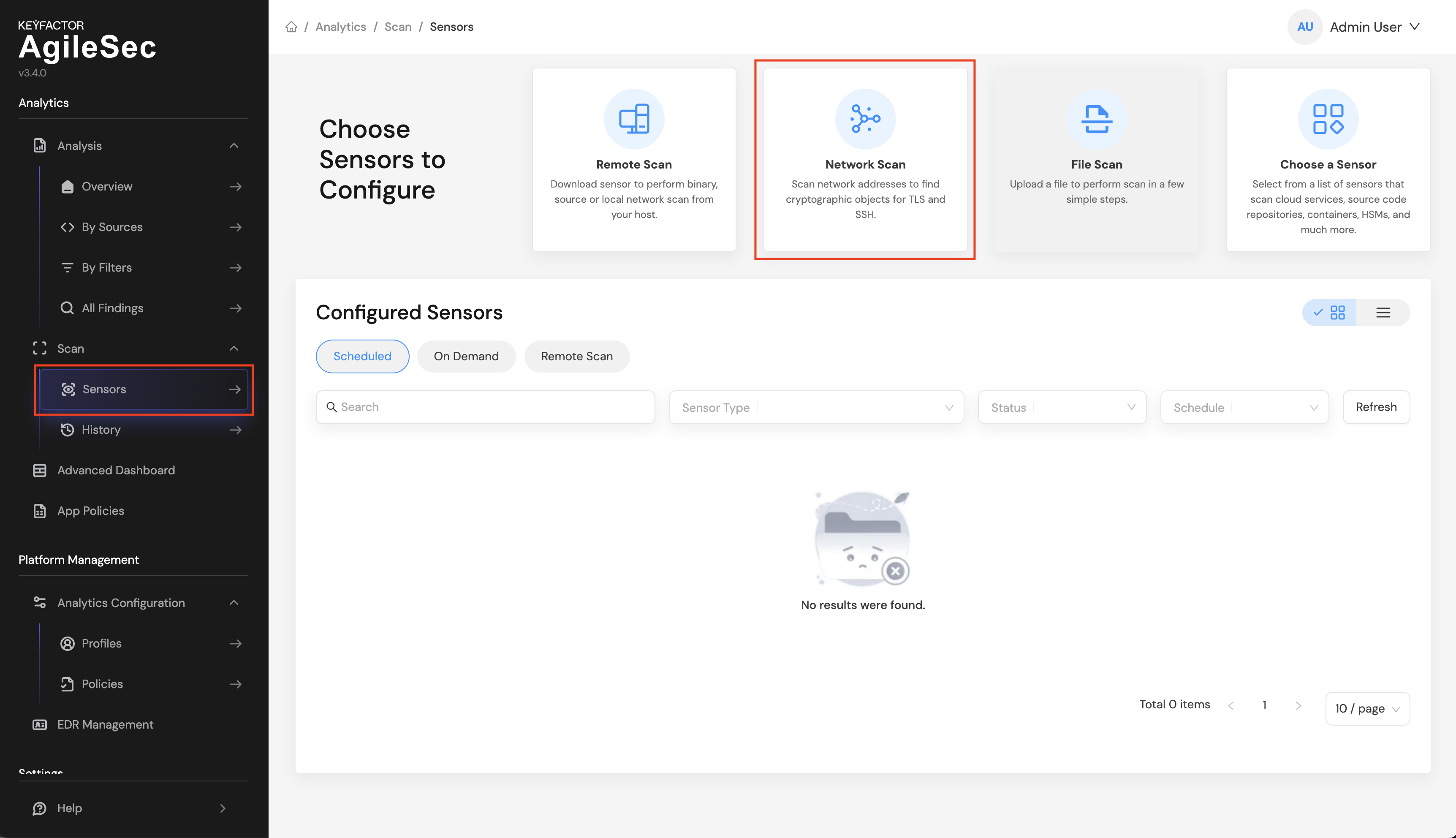

Run a Smoke Test

Follow these steps to run a quick smoke test and confirm the platform is working:

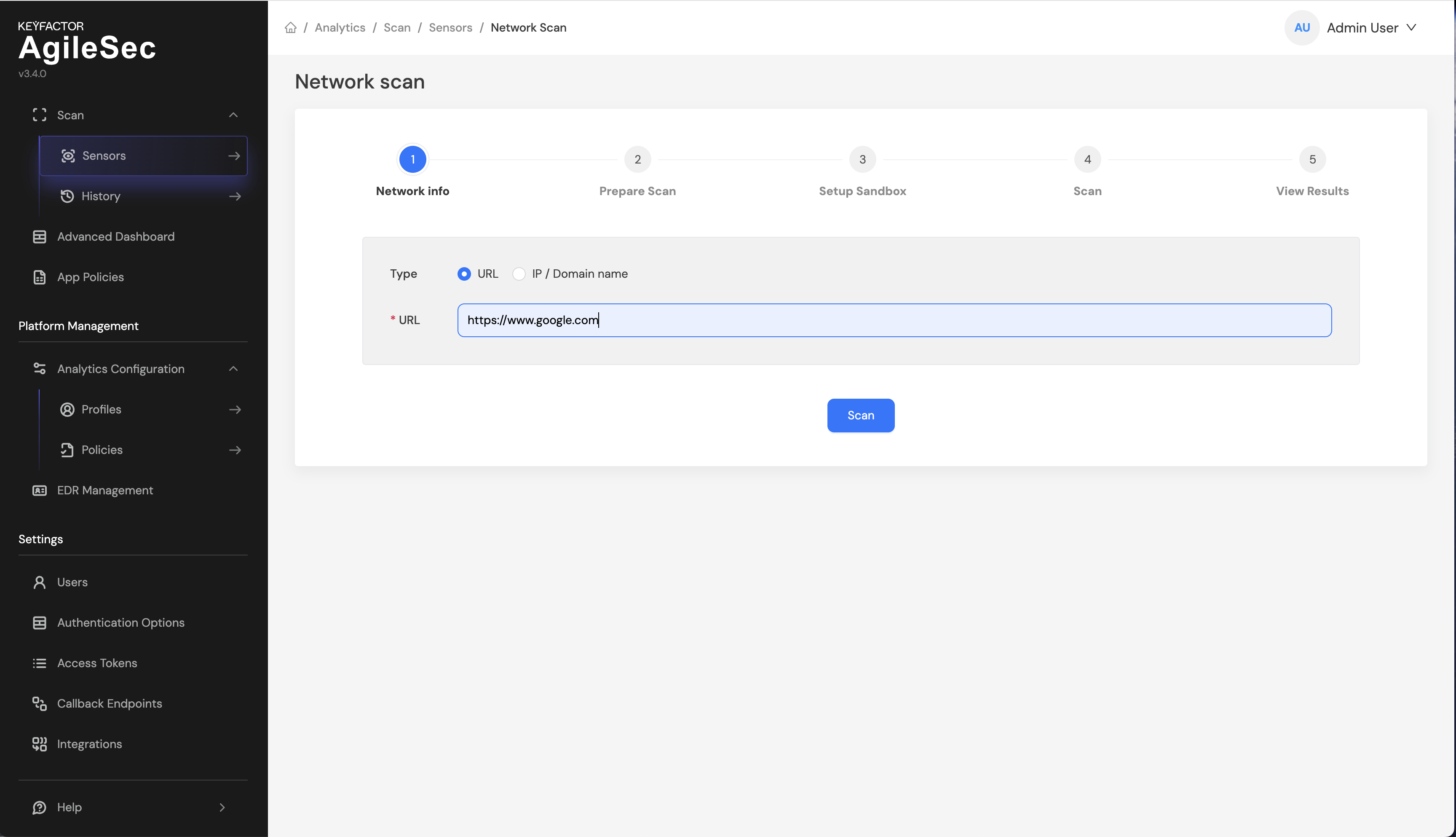

Step 1: In the Web UI, go to Sensors -> Network Scan

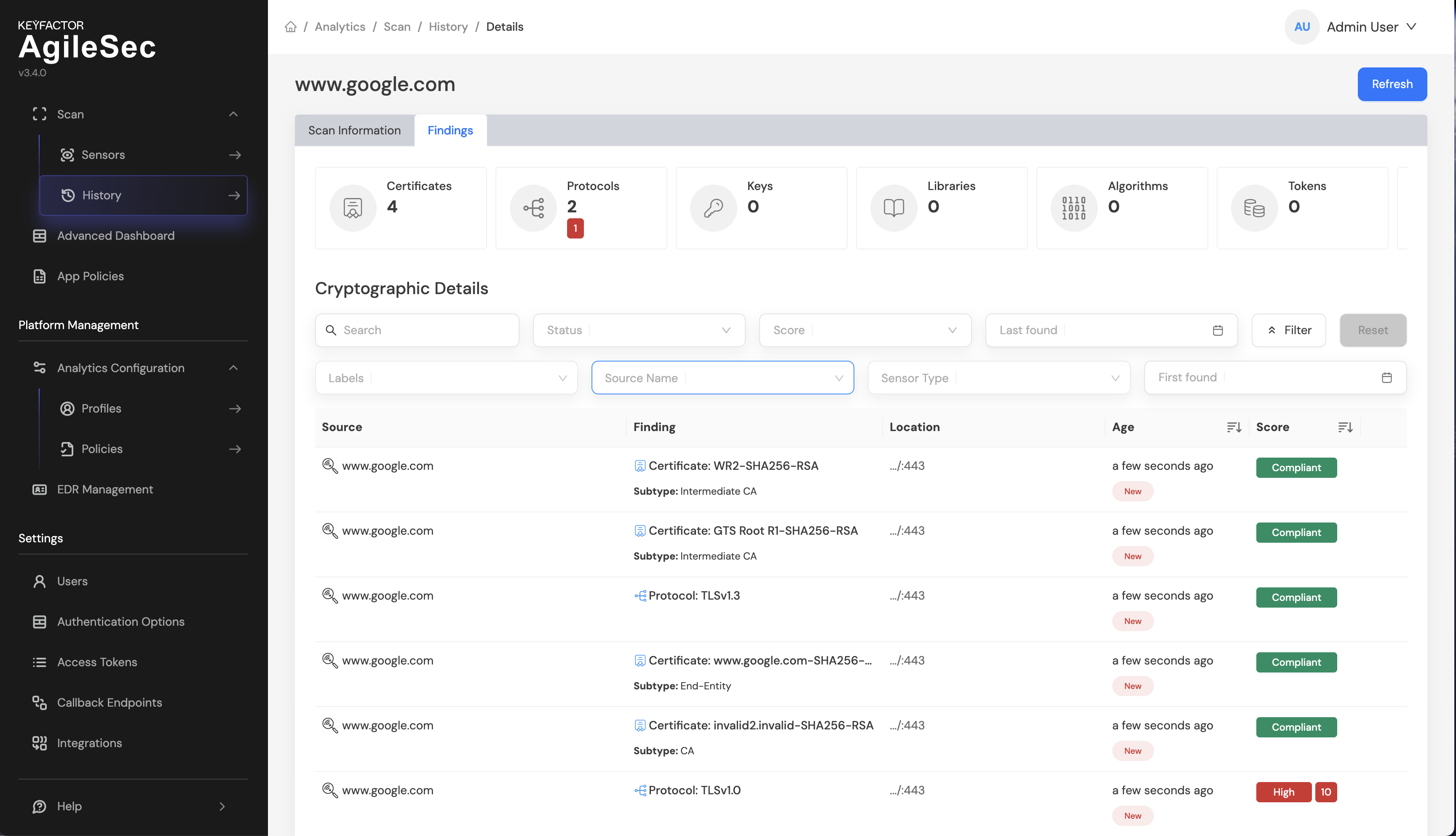

Step 2: On the Network Scan page, enter an HTTPS URL to scan (for example, https://www.google.com), then click Scan to start the scan.

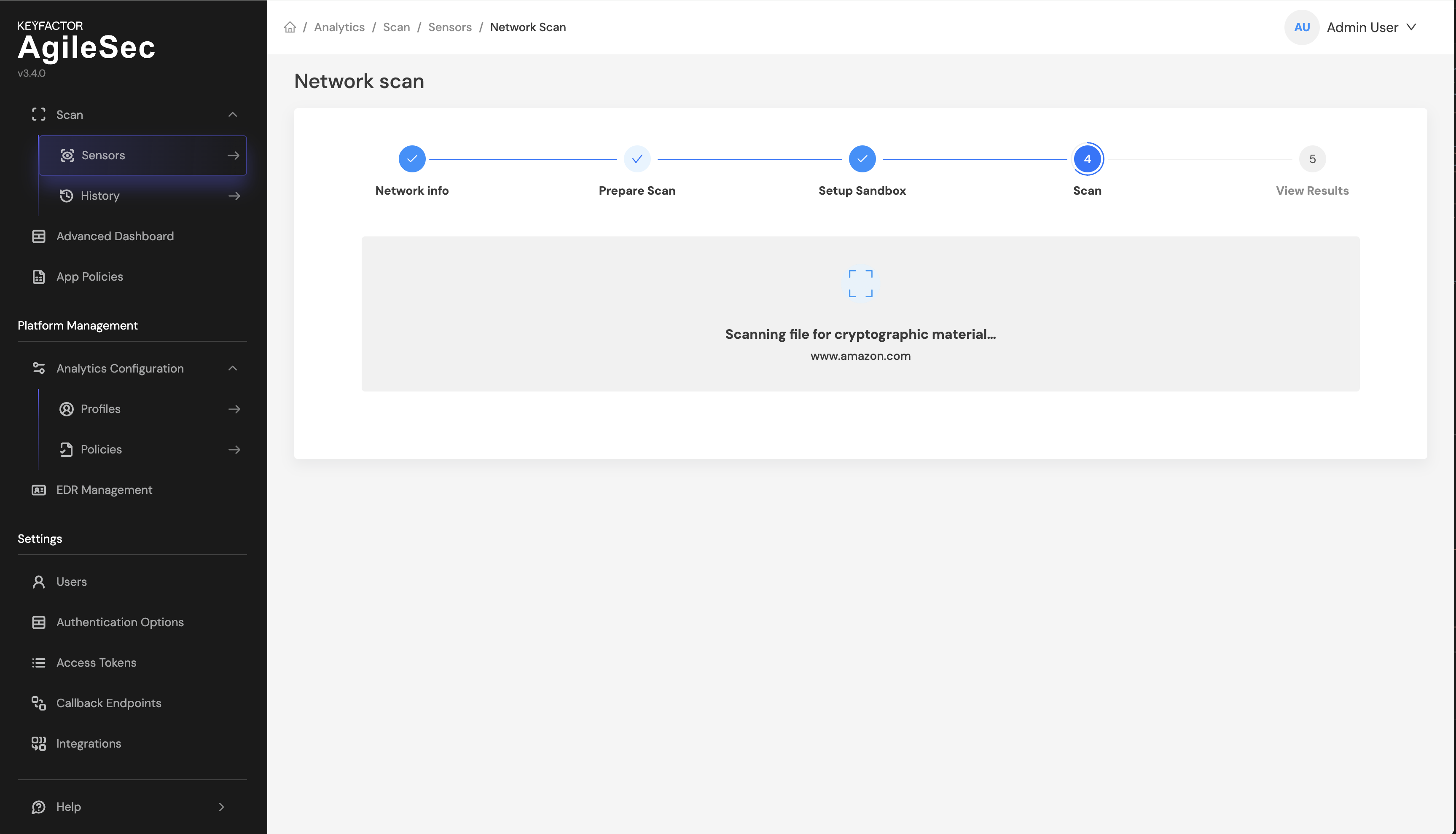

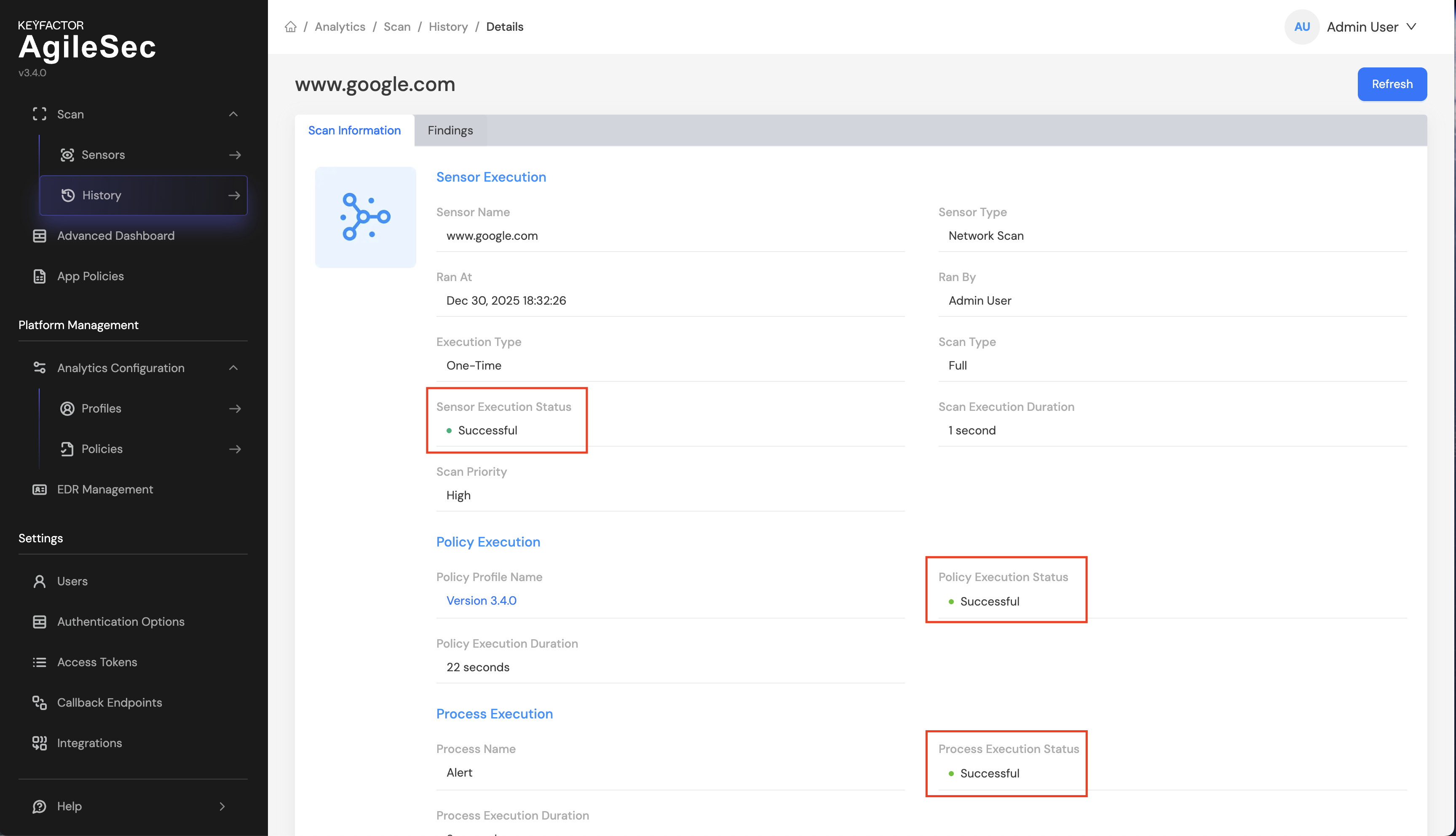

Step 3: While the scan is running, you will see a screen similar to the following:

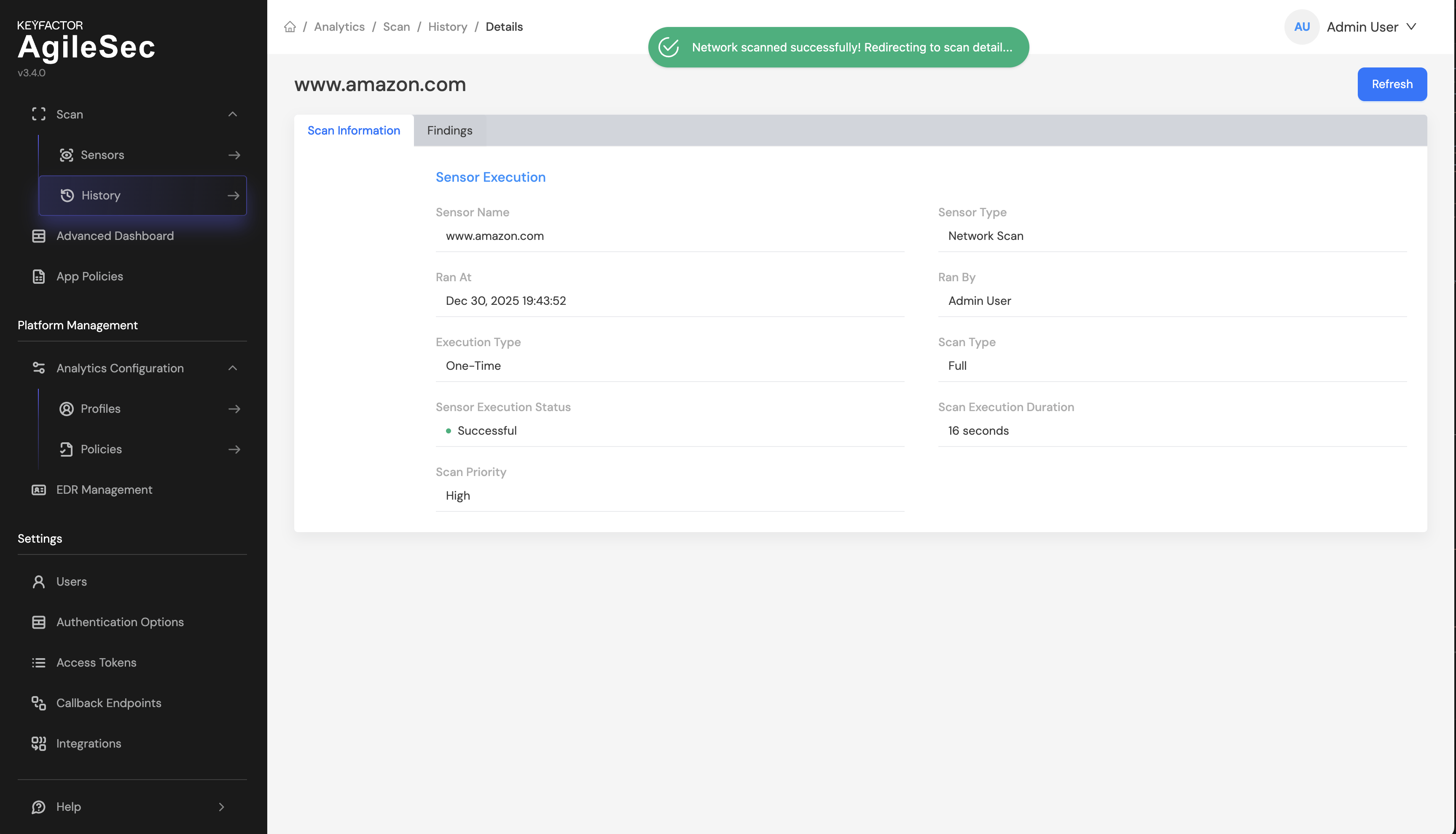

Step 4: Once the scan completes successfully, you will see a screen similar to the following:

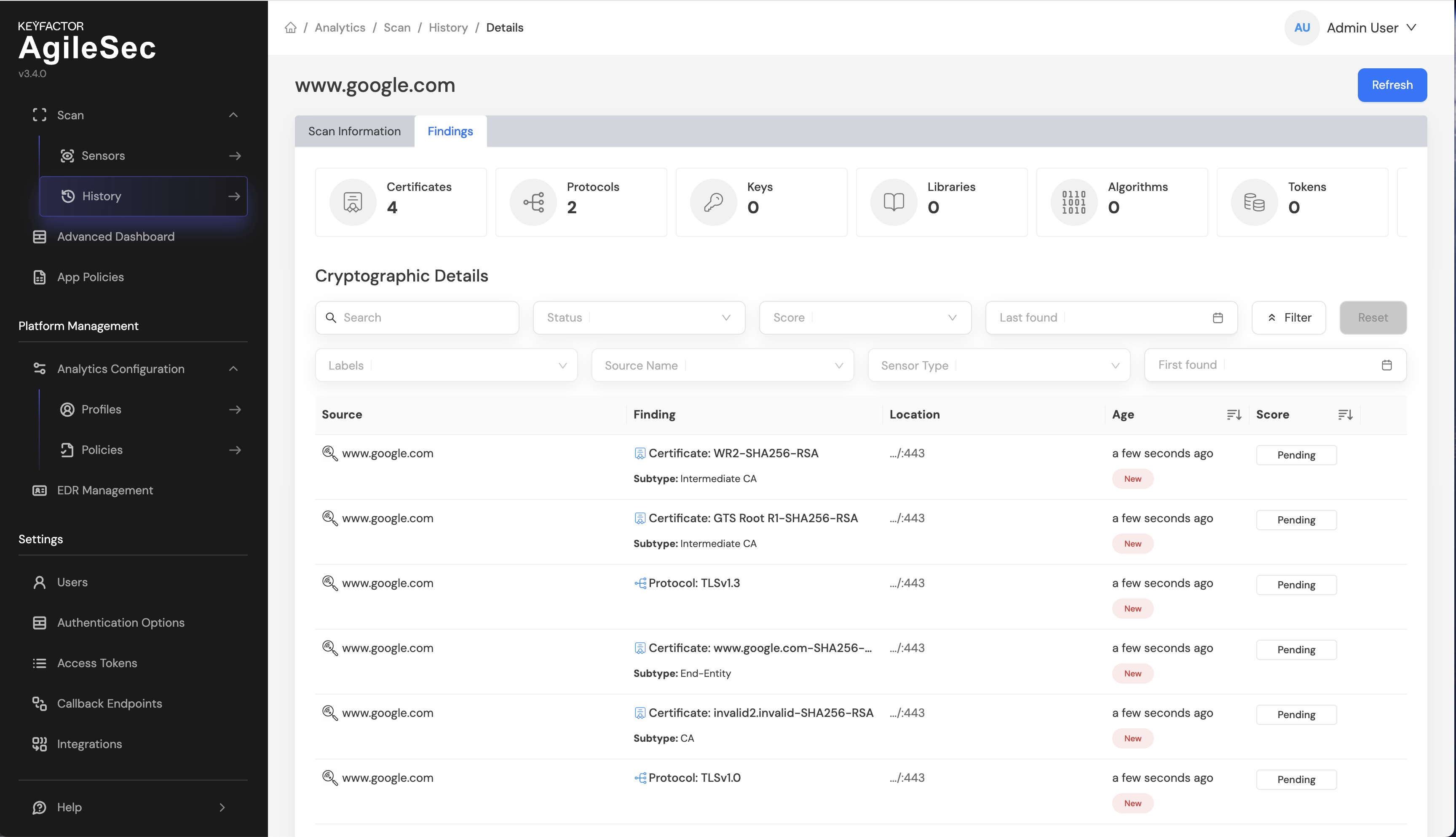

Step 5: At this point, the scan has completed and the pipeline is waiting for the policy execution to finish. Policy execution can take up to 45 seconds. Until policies run, all findings will show a Pending status under the Findings tab:

Step 6: Once policies have run, the Score column shows a risk score instead of Pending, as shown in the screenshot below:

Step 7: Shortly after policies run successfully, a backend process performs additional analysis on the findings. Once this process completes, you will see Successful statuses as shown in the screenshot below. This confirms the platform is working as expected.

Managing Services

After installation, you can manage services using the unified service manager script at ./scripts/manage.sh

The manage.sh script provides a centralized way to manage all platform services:

cd /scripts

./manage.sh <action> [options] [services...]

Actions

Action | Description |

|---|---|

start | Start services |

stop | Stop services |

restart | Stop and then start services |

reload | Reload service configuration where supported |

status | Check status of services |

list | List available services |

help | Display help message |

Options

Option | Description |

|---|---|

-d, --debug | Enable debug mode (show service output in console) |

-h, --help | Display help message and exit |

Available Services

Service | Description |

|---|---|

haproxy | HAProxy Load Balancer |

opensearch | OpenSearch Search Engine |

opensearch-dashboards | OpenSearch Dashboards |

mongodb | MongoDB Server |

kafka | Kafka Server |

td-agent | Fluentd Data Collector |

webui | Web UI Microservice |

api | Web API Microservice |

sm | Security Manager Microservice |

analytics-manager | Analytics Manager Microservice |

ingestion | Ingestion Microservice |

scheduler | Scheduler Microservice |

FluentD | Fluentd Data Collector |

If no specific services are specified, the action will be applied to all installed services.

Usage Examples

To start all services:

./manage.sh start

To start only OpenSearch with debug output:

./manage.sh start -d opensearch

To start multiple specific services:

./manage.sh start opensearch opensearch-dashboards

The script automatically resolves dependencies, starting OpenSearch first (as it is a dependency for other services) before starting any dependent services.

To stop all installed services:

./manage.sh stop

To stop only specific services:

./manage.sh stop haproxy td-agent

The script stops services in reverse dependency order to ensure a clean shutdown.

To restart all installed services:

./manage.sh restart

To restart only OpenSearch:

./manage.sh restart opensearch

To reload configuration:

./manage.sh reload haproxy

To list the status of all services:

./manage.sh status

To check the status of specific services:

./manage.sh status opensearch td-agent

To list all available services:

./manage.sh list

If HAProxy is configured to use a privileged port (below 1024), you need root privileges to start or stop it. The script displays the appropriate command to run with sudo.

Post Installation Configuration

SAML Setup

For detailed instructions on various SSO integration options, see Authentication and Access Control.

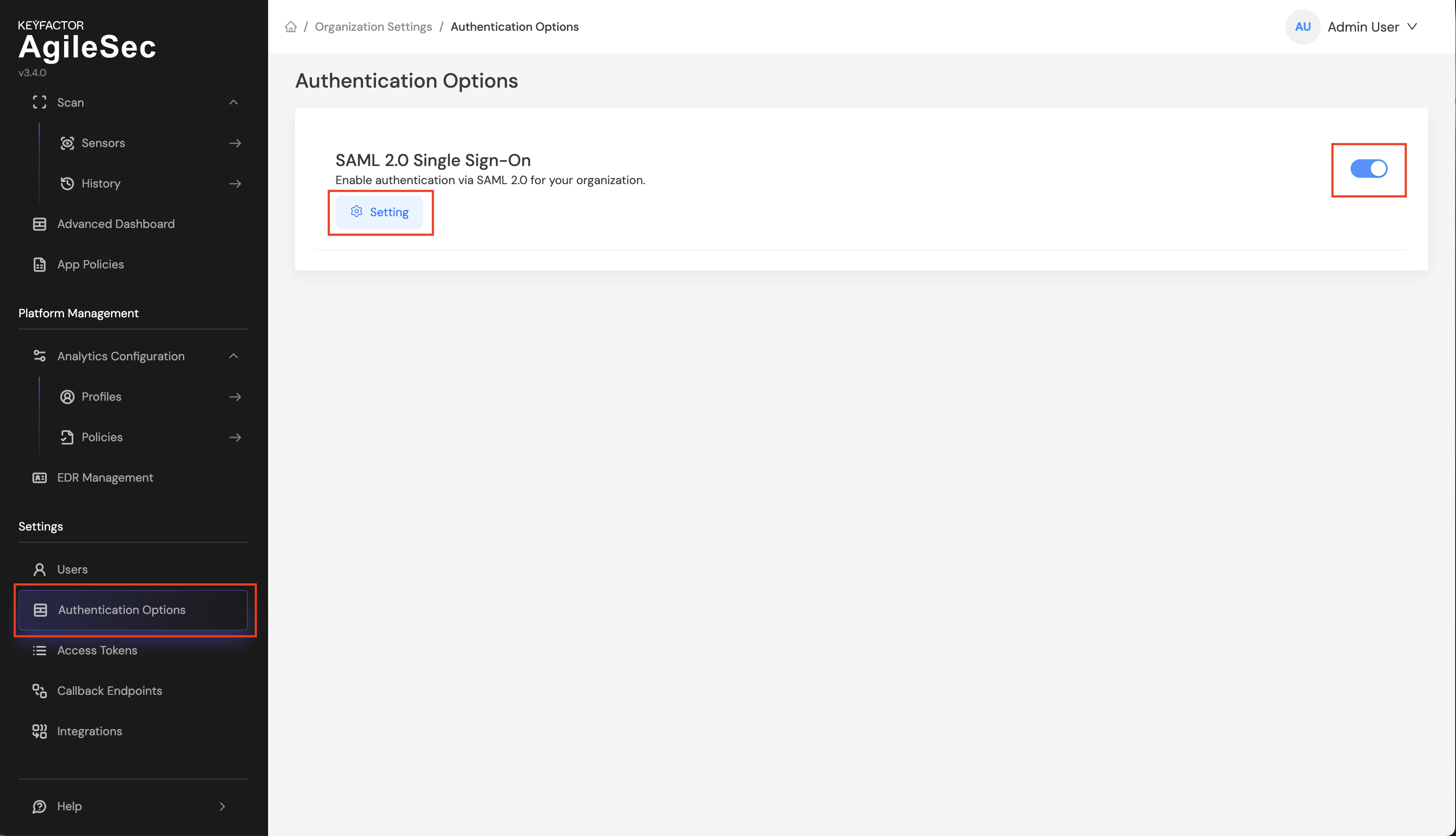

Only users with the Platform Admin privilege role for the organization can edit the SAML 2.0 configuration. To access the SAML setup page, do the following:

Step 1: Go to Settings -> Authentication Options

Step 2: Turn on SAML 2.0 Single Sign-On

Step 3: Open Settings for SAML 2.0 Configuration, then configure the SAML settings for your environment. Details for each configurable option are provided in the next section.

Configuration Options

Service Provider Information

Field | Description | Azure AD SSO configuration equivalent field |

|---|---|---|

Organization SAML ID | Organization's unique SAML internal identifier. | N/A |

Callback URL | The URL where the IdP should redirect/post to after authentication. | Reply URL (Assertion Consumer Service URL) |

SP Entity ID | Service Provider Entity ID | Identifier (Entity ID) |

Authorization Settings

Field | Description | Azure AD SSO configuration equivalent field |

|---|---|---|

Assertions Signed | Indicates if SAML assertions should be signed. | SAML Signing Certificate, Signing Option includes "Sign SAML assertion" |

Authentication Response Signed | Indicates if the SAML authentication response should be signed. | SAML Signing Certificate, Signing Option includes "Sign SAML response" |

Only SHA256 signing algorithm is supported.

Identity Provider Configuration

Field | Description | Azure AD SSO configuration equivalent field |

|---|---|---|

IDP Metadata | Raw XML metadata for the Identity Provider. | SAML Certificates / Federation Metadata XML |

Metadata URL | URL to fetch the IdP metadata. | SAML Certificates / App Federation Metadata Url |

You must provide one of these two options.
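Before pasting IdP metadata or entering a Metadata URL, you can run a quick structural check. The following is a minimal sketch using grep and cut only; it assumes standard SAML 2.0 metadata (an EntityDescriptor containing an IDPSSODescriptor with an embedded signing certificate) and does not validate the XML against the SAML schema. check_idp_metadata is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read IdP metadata XML on stdin, fail if the expected
# SAML elements are missing, otherwise print the IdP's entityID.
check_idp_metadata() {
  local xml entity_id
  xml=$(cat)
  grep -q 'EntityDescriptor' <<<"$xml" || { echo "no EntityDescriptor" >&2; return 1; }
  grep -q 'IDPSSODescriptor' <<<"$xml" || { echo "no IDPSSODescriptor" >&2; return 1; }
  grep -q 'X509Certificate' <<<"$xml" || { echo "no X509Certificate" >&2; return 1; }
  # Take the first entityID="..." attribute and strip the quotes.
  entity_id=$(grep -o 'entityID="[^"]*"' <<<"$xml" | head -n 1 | cut -d'"' -f2)
  echo "entityID: ${entity_id}"
}
```

For a Metadata URL, pipe the download into the check: curl -fsS "<metadata_url>" | check_idp_metadata.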

Custom Attribute Configuration

Field | Description | Azure AD SSO configuration equivalent field |

|---|---|---|

Claim Name | Case-sensitive claim name provided by the SAML IdP used for role mapping. | Attributes & Claims. |

Role Mapping Configuration

Field | Description | Note |

|---|---|---|

IDP role | Role assigned by the IdP | Value of the mapped group claim |

ISG role | AgileSec role mapped to the IdP role | AgileSec role you want to assign to that group |

Troubleshooting

If you encounter issues during installation or operation:

Installation Issues

Check the console output for specific error messages.

Verify that all prerequisites are met.

Ensure all certificate files are correctly placed and have the proper permissions and filenames.

Check the .env file to make sure the following settings are correct: private_ip, analytics_hostname, analytics_domain, analytics_port, cluster_frontend_node_ips, cluster_backend_node_ips.

Make sure the analytics FQDN <analytics_hostname>.<analytics_domain> is reachable on <analytics_port>.

Verify network connectivity between nodes in a multi-node installation.
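The .env and reachability checks above can be scripted. The following is a minimal sketch, assuming .env holds simple KEY=value lines (key names are matched case-insensitively in case your file uses upper case); the helper names are hypothetical. tcp_open relies on Bash's built-in /dev/tcp redirection, so no extra tools are needed.

```bash
#!/usr/bin/env bash
# Hypothetical troubleshooting helpers for .env and network reachability.
REQUIRED_KEYS=(private_ip analytics_hostname analytics_domain analytics_port
               cluster_frontend_node_ips cluster_backend_node_ips)

# Usage: env_value <env_file> <key>  Prints the first value, quotes removed.
env_value() {
  grep -i -m 1 "^$2=" "$1" | cut -d= -f2- | tr -d "\"'"
}

# Usage: check_env_keys <env_file>  Reports missing or empty required keys.
check_env_keys() {
  local key rc=0
  for key in "${REQUIRED_KEYS[@]}"; do
    if [ -z "$(env_value "$1" "$key")" ]; then
      echo "missing or empty: $key" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage: tcp_open <host_or_ip> <port>  Succeeds if a TCP connection opens
# within 5 seconds.
tcp_open() {
  timeout 5 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Usage: fqdn_reachable <fqdn> <port>  Checks name resolution, then the port.
fqdn_reachable() {
  getent hosts "$1" >/dev/null || { echo "cannot resolve $1" >&2; return 1; }
  tcp_open "$1" "$2"
}
```

For example, from <installer_directory>: check_env_keys .env, then fqdn_reachable "$(env_value .env analytics_hostname).$(env_value .env analytics_domain)" "$(env_value .env analytics_port)". For node-to-node checks, tcp_open <node_ip> 27017 tests a single port from the Ports table.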

Service Issues

Check service logs in the <installation_path>/logs directory.

Verify port availability using the netstat or ss commands.

Ensure proper certificate permissions and ownership.

Check disk space with df -h.

Verify memory availability with free -h.
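Port conflicts are easiest to spot before installation. The following is a minimal sketch, assuming iproute2's ss is available (it is on RHEL 8 and 9); the helper names are hypothetical. Run it before installing or with platform services stopped, because once installed, the platform's own services listen on these ports.

```bash
#!/usr/bin/env bash
# Hypothetical helpers: detect TCP port conflicts with ss (iproute2).

# Read "ss -Htln" output on stdin and print the listening TCP ports, sorted.
listening_ports() {
  # Column 4 is local_address:port, e.g. 0.0.0.0:8443 or [::]:22.
  awk '{print $4}' | sed 's/.*://' | sort -un
}

# Usage: check_ports_free <port>...  Prints each port that is already in
# use and succeeds only if all of them are free.
check_ports_free() {
  local used p rc=0
  used=$(ss -Htln | listening_ports)
  for p in "$@"; do
    if grep -qx "$p" <<<"$used"; then
      echo "in use: $p"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, on frontend-1 with the default ports: check_ports_free 8443 3000 7443 5443 9200 9300 9092 9093 9094 27017 20443 30443 40443 50443 11443.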

Common Errors

Certificate Issues: Ensure all certificate DNs and CNs match the specifications in the Certificate Requirements section.

Port Conflicts: Verify no other services are using the required ports.

Permission Denied: Check file and directory permissions.

Memory Errors: Verify you have sufficient memory available (see the system requirements: at least 8GB on scan nodes, 16GB on frontend nodes, and 32GB on backend nodes).
