Node Cluster Replication Configuration

The following covers node cluster replication configuration.

Accessing Nodes

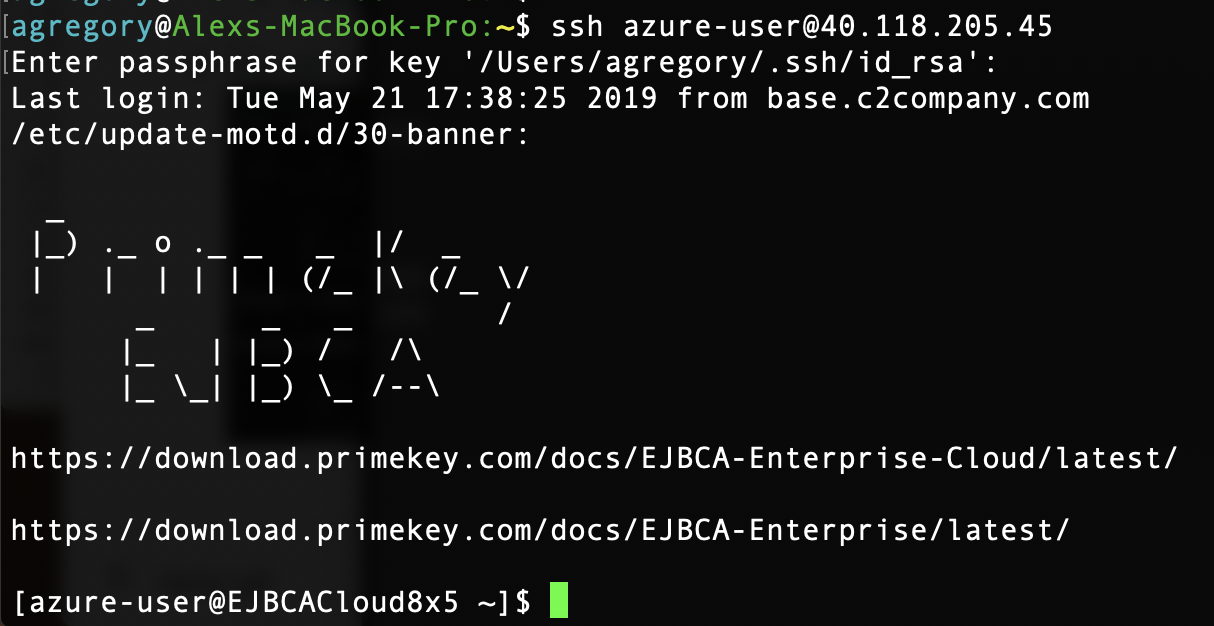

To access the nodes:

- If you selected SSH key access when procuring your instance, use the SSH key that you selected when procuring the instance. If you chose username and password, use the combination you chose at launch time to SSH into the EJBCA Cloud instance. For example, for azure-user with an IP address of 40.118.253.3, use the following:

# ssh azure-user@40.118.253.3

- Run the command sudo su to get elevated privileges:

# sudo su

- Change to the /opt/PrimeKey/support directory.

Replication Configuration on Node 1

Designate the node to start with. This is the node that replicates its data to the other nodes in the cluster. When accessing the databases on the nodes for the first time, use each node's instance ID as the password. Once the data has been replicated from Node 1 to the remaining nodes in the cluster, all nodes use the same password as Node 1.

The MySQL configuration file is located at /etc/my.cnf.d/server.cnf. This file already has much of the configuration needed to get a cluster working. Change the cluster name as required by editing the wsrep_cluster_name="galera" value to the desired value. In this example, "ejbca_cluster" is used. This value should be the same on all nodes in the cluster.
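The cluster name change can also be scripted rather than made by hand. The following is a minimal sketch, assuming the file contains a single line starting with wsrep_cluster_name= and that the new name uses only letters, digits, and underscores. The function name with_cluster_name is hypothetical and not part of the EJBCA Cloud tooling.

```shell
# Hypothetical helper: print FILE with wsrep_cluster_name set to NAME.
# The result goes to stdout so it can be reviewed before replacing the file.
with_cluster_name() {
  case $2 in
    ''|*[!A-Za-z0-9_]*)
      # Reject names that are empty or could break the sed expression.
      echo "cluster name must be letters, digits, or underscores" >&2
      return 1
      ;;
  esac
  sed "s/^wsrep_cluster_name=.*/wsrep_cluster_name=\"$2\"/" "$1"
}

# Example (run as root on the node, then compare before replacing the file):
#   with_cluster_name /etc/my.cnf.d/server.cnf ejbca_cluster > /tmp/server.cnf.new
#   diff /etc/my.cnf.d/server.cnf /tmp/server.cnf.new
```

Writing to a separate file first keeps the original configuration untouched until the difference has been checked.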

Create a backup of the system by running:

# /opt/PrimeKey/support/system_backup.sh

For more information on backing up EJBCA Enterprise Cloud instances, refer to the Backup Guide.

Run the following commands to ensure that the remote systems and localhost can write to the database. Change <PASSWORD> to the desired password and the "10.4.0.%" to be valid for the subnet used. The "%" character is a wildcard and can be used if desired. For example, if the internal address space is "10.10.1.0/24" then "10.10.1.%" could be used.

If this configuration is being done in more than one VPC, change the subnet space or IP address for each subnet with the commands below. Three separate statements for each of the specific IP addresses for each node in the cluster can be created for tighter security if desired.
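For the tighter-security variant with one statement per node, the statements can be generated from a list of addresses. This is a minimal sketch that reuses the GRANT wording from this section and leaves the <PASSWORD> placeholder in place. The function name grant_statements is hypothetical, and the addresses in the example are the node addresses used in this guide.

```shell
# Hypothetical helper: print one GRANT statement per host or host pattern.
# The password is left as the <PASSWORD> placeholder used in this guide.
grant_statements() {
  for host in "$@"; do
    printf "GRANT RELOAD, LOCK TABLES, REPLICATION CLIENT on *.* to 'repl_user'@'%s' identified by '<PASSWORD>';\n" "$host"
  done
}

# Example: one statement for each node address in this guide.
#   grant_statements 10.4.0.4 10.4.0.5 10.2.0.4
```

Each printed statement can be reviewed and then run with mysql -e in the same way as the subnet-based commands in this section, after replacing <PASSWORD>.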

# mysql -u root --password=<PASSWORD> -e "GRANT RELOAD, LOCK TABLES, REPLICATION CLIENT on *.* to 'repl_user'@'10.4.0.%' identified by '<PASSWORD>';"

# NEXT LINE ONLY NEEDED WHEN USING ADDITIONAL NETWORKS:

# mysql -u root --password=<PASSWORD> -e "GRANT RELOAD, LOCK TABLES, REPLICATION CLIENT on *.* to 'repl_user'@'10.2.0.%' identified by '<PASSWORD>';"

Edit the /etc/my.cnf.d/server.cnf file and look for the [galera] section, under the comment “# Galera Cluster Configuration”. Add the following lines to the section, changing the two “wsrep_cluster_address” IP addresses to the Node 2 and Node 3 IP addresses in the cluster, the value for “wsrep_node_name” for Node 1 and the “wsrep_node_address” to be the IP address for Node 1 if not already set:

wsrep_cluster_name=ejbca_cluster

wsrep_cluster_address="gcomm://10.4.0.5,10.2.0.4"

wsrep_node_name=EJBCANode1

wsrep_node_address="10.4.0.4"

Replication Configuration on Node 2

SSH into Node 2 and perform a backup:

# /opt/PrimeKey/support/system_backup.sh

Stop mysql on this node:

# service mysql stop

Edit the /etc/my.cnf.d/server.cnf file and look for the [galera] section, under the comment “# Galera Cluster Configuration”. Add the following lines to the section, changing the two “wsrep_cluster_address” IP addresses to the Node 1 and Node 3 IP addresses in the cluster, the value for “wsrep_node_name” for Node 2 and the “wsrep_node_address” to be the IP address for Node 2 if not already set. Also change the wsrep_sst_auth to be the password from Node 1.

[mysqld]

wsrep_cluster_name=ejbca_cluster

wsrep_cluster_address="gcomm://10.4.0.4,10.2.0.4"

wsrep_node_name=EJBCANode2

wsrep_node_address="10.4.0.5"

wsrep_sst_auth=repl_user:<PASSWORD>

WildFly Configuration for Node 2

Edit the WildFly datasource.properties file and update the password to the password used in the database:

# vim /opt/PrimeKey/wildfly_config/datasource.properties

Change DATABASE_PASSWORD= <PASSWORD> to the password of the main node that replicated the data. In this case this is the password from Node 1.
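The same change can be made without an interactive editor. The following is a minimal sketch, assuming the file holds one KEY=VALUE entry per line with the key at the start of the line. The function name set_property is hypothetical and not part of the EJBCA Cloud tooling. It avoids sed so that passwords containing characters such as / or & are written literally.

```shell
# Hypothetical helper: set KEY to VALUE in a KEY=VALUE properties file.
# Usage: set_property FILE KEY VALUE
set_property() {
  file=$1 key=$2 value=$3
  tmp=$(mktemp) || return 1
  # Copy the file line by line, rewriting only the line that starts with KEY=.
  while IFS= read -r line || [ -n "$line" ]; do
    case $line in
      "$key="*) printf '%s=%s\n' "$key" "$value" ;;
      *)        printf '%s\n' "$line" ;;
    esac
  done < "$file" > "$tmp"
  # Overwrite in place so the file keeps its owner and permissions.
  cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Example (replace <PASSWORD> with the database password from Node 1):
#   set_property /opt/PrimeKey/wildfly_config/datasource.properties DATABASE_PASSWORD '<PASSWORD>'
```

Single quotes around the password in the example keep the shell from interpreting special characters in it.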

Replication Configuration on Node 3

SSH into Node 3 and perform a backup:

# /opt/PrimeKey/support/system_backup.sh

Stop mysql on this node:

# service mysql stop

Edit the /etc/my.cnf.d/server.cnf file and look for the [galera] section, under the comment “# Galera Cluster Configuration”. Add the following lines to the section, changing the two “wsrep_cluster_address” IP addresses to the Node 1 and Node 2 IP addresses in the cluster, the value for “wsrep_node_name” for Node 3 and the “wsrep_node_address” to be the IP address for Node 3 if not already set. Also change the wsrep_sst_auth to be the password from Node 1.

[mysqld]

wsrep_cluster_name=ejbca_cluster

wsrep_cluster_address="gcomm://10.4.0.4,10.4.0.5"

wsrep_node_name=EJBCANode3

wsrep_node_address="10.2.0.4"

wsrep_sst_auth=repl_user:<PASSWORD>

WildFly Configuration for Node 3

Edit the WildFly datasource.properties file and update the password to the password used in the database:

# vim /opt/PrimeKey/wildfly_config/datasource.properties

Change DATABASE_PASSWORD= <PASSWORD> to the password of the main node that replicated the data. In this case this is the password from Node 1.
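As a cross-check of the three Galera configurations in this guide, each node's wsrep_cluster_address lists the addresses of the other two nodes and never its own. The following minimal sketch derives that line from a node's own address and the full node list. The function name cluster_address_for is hypothetical, and the addresses are the example values used in this guide.

```shell
# Hypothetical helper: print the wsrep_cluster_address line for one node.
# Usage: cluster_address_for SELF_IP NODE_IP [NODE_IP ...]
cluster_address_for() {
  self=$1
  shift
  peers=
  for ip in "$@"; do
    # Skip the node's own address; only the other nodes are listed.
    if [ "$ip" != "$self" ]; then
      peers=${peers:+$peers,}$ip
    fi
  done
  printf 'wsrep_cluster_address="gcomm://%s"\n' "$peers"
}

# Example for Node 3 (10.2.0.4) in this guide:
#   cluster_address_for 10.2.0.4 10.4.0.4 10.4.0.5 10.2.0.4
```

Comparing the printed line with the value in /etc/my.cnf.d/server.cnf on each node is a quick way to catch a copied configuration that still points at the wrong peers.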
